Lately I've been enjoying an anthropological narrative called People of the Deer by Farley Mowat -- the famed and tenacious environmentalist, humanitarian, and defender of true scientific inquiry. In this colorful true-story adventure, first published in 1952, Mowat finds himself drawn, as he so often is, to a place far away in the middle of nowhere in the deep northern territories of Canada. It is here that he befriends and lives among an Eskimo group called the Ilhalmiut and begins to understand how modern encroachment -- namely fur-trapping and government policy -- is undermining the native peoples' ability to live in a place where their ancestors had thrived for thousands of years before them. Mowat writes, quite bluntly, in the foreword of the 1975 edition of the book: "Genocide can be practiced in a variety of ways." Like Weston Price, he does not hesitate to blame Western culture for the decimation and struggle of the traditional peoples with whom he became intimately acquainted. From the foreword:

We have long prided ourselves on being a democratic nation, dedicated to the altar of freedom. Freedom for whom? If it is only freedom for ourselves to do as we please at the expense of others, then our pious stance is even more abhorrent than that of any overt tyrant -- for ours is based on a vile hypocrisy.

Fat and Deer Hairs

While the book contains many fascinating tidbits, among the most intriguing are Mowat's detailed descriptions of the traditional Ilhalmiut diet and their shifting health as a result of Western influence. When he first arrives at the small settlement of Ilhalmiut, the author is welcomed with a tray of meat that might make any Westerner's stomach churn:

Half a dozen parboiled legs of deer were spread out in a thick gravy which seemed to be composed of equal parts of fat and deer hairs. Bobbing about in the debris were a dozen tongues and, like a cage holding the lesser cuts of meats, there was an entire rib basket of a deer.

Still hungry? There's more!

There were side dishes too ... a skin sack, full of flakes of dry meat ... a smoking bundle of marrow bones ... neatly cracked so that we would have no trouble extracting the succulent marrow. (p. 82)

Yum.

The cooking varied somewhat, but the food did not. The rule was meat at every meal and nothing else but meat, unless you could count a few well-rotted duck eggs which served as appetizers. To satisfy my curiosity I tried to estimate the quantity of meat Hekwaw [a member of the tribe] put away each day. I discovered he could handle ten to fifteen pounds when he was really hungry... (p. 85)

It doesn't take Mowat long to identify the key ingredient of the Ilhalmiut diet: fat. Having lived on lean meat for an extended period himself, he describes the vital importance of fat in an all-meat diet through his battle with an affliction which he names, for want of a better term, mal de caribou, also known to a great many arctic explorers, prisoners of war, and human carnivores as rabbit starvation:

... persistent diarrhea was only part of the effect of mal de caribou. I was [also] filled with a sick lassitude, an increasing loss of will to work that made me quite useless ...

Mowat's guide -- a half-Eskimo, half-white man named Franz -- prepared and administered a peculiar remedy:

... he took out a half-pound of precious lard, melted it in a frying pan, and, when it was lukewarm and not yet congealed, he ordered me to drink it. Strangely, I was greedy for it ... I drank a lot of it, then went to bed; and by morning I was completely recovered ... I was suffering from a deficiency of fat and did not realize it. (p. 88)

Death and Disease Among the Ilhalmiut

Concerning the health of the Ilhalmiut people, Mowat goes into extensive historical, anecdotal, and statistical detail while attempting to get at the root of the Northern natives' plight of disease and illness following the arrival of Western culture. It's no secret to those who have studied the writings and theories of nutritional heroes such as Weston Price, Sir Robert McCarrison, T.L. Cleave, and others that when modern foods such as white flour and sugar are introduced to a traditional culture, ill health follows, worsening from generation to generation. Farley Mowat joins the ranks of these great independent thinkers when he waxes sensible, explaining his own theory as to why the people of the far North and other native peoples in history have succumbed to tuberculosis, measles, and smallpox:

Perhaps you have heard of the decimation of the forest Indians brought about by disease, by lack of adaptability, by inherent laziness and indolence or by other causes ... you have never heard the truth, for all of these apparent causes are manifestations of the real destroyer, which is -- starvation. If you ask about the thousands of Indians and Eskimos who die each year of tuberculosis, if you ask about the measles and smallpox epidemics which ... have destroyed over one-tenth of the Northern natives ... these people too die of starvation ... (p. 91)

Is it just me, or is Mr. Mowat on to something here? He goes on to tell the story of the Idthen Eldeli -- literally "Eaters of the Deer" -- a northern Indian people he lived with in the winter of 1948:

In 1860 ... there were about 2000 members of the Idthen ... when the deer moved ... the Idthen people followed after ... [they] annually traveled over a thousand miles through the Barrens.

In the eighteenth century the famous explorer Samuel Hearne journeyed ... with a band of these Indians and he speaks, as do many others, of the almost superhuman endurance and physical capacity of the Idthen people.

In the winter of 1948 when I lived with the Idthen ... they numbered a little over 150 men, women, and children who spent the winters on their scanty trap lines, starving through the cold months until they could fish for life along the opening rivers ... They are a passive, beaten, hopeless people who wait miserably for death. (p. 92)

What could be the instigator of such an unfortunate circumstance? Ol' Farley doesn't mince words:

Starvation first came to them when they began to subsist on a winter diet which now consists of 80 percent white flour, with a very little lard and baking powder, and in summer almost nothing but straight fish. The Idthen people now get little of the red meat and white fat of the deer, once their sole food. Three generations have been born and lived -- or died -- upon a diet of flour bannocks and fish eaten three times a day and washed down with tea. Each of these generations has been weaker and had less "immunity" to disease than the last. (p. 93)

Government aid: giving natives the short end of the stick in America since 1492. It's interesting how what Mowat refers to as starvation can also be seen as a displacement of native foods, as Weston Price pointed out in the 1930s. Either way, the result is lowered immunity and degeneration. Mowat's solution to the dilemma of this "starvation"? Here it is, with characteristic common sense:

Surely there is but one way to cure a man of the diseases which are the products of three generations of starvation, and that is to feed him. (p. 95)

Let them eat meat and fat!

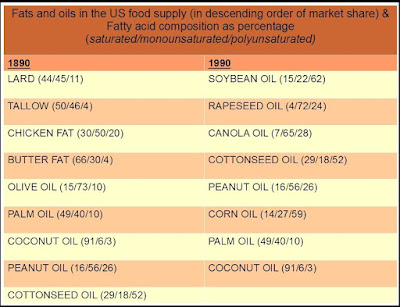

Over the course of the 20th century, as heart disease has increased and cancer has become a common cause of death in the United States, saturated fat consumption has remained quite stable. Monounsaturated fat consumption has increased substantially. Yet this change pales in comparison to the growing popularity of polyunsaturated fat in the American diet. In the 1950s, with polyunsaturated vegetable oils being promoted by the edible oil industry (whose primary motivation was to make a profit), Americans began eating more and more of these unnatural, man-made fats -- fats which were traditionally consumed only as part of whole foods, such as grains, vegetables, seeds, and nuts.


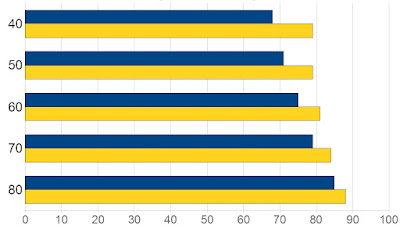

In the above figure, we see that infant mortality rates in 1900 are quite high at 14%. Correspondingly, average life expectancy of newborns in 1900 is very low at 47.6 years. In 1992, with infant deaths (along with infectious disease, undernourishment, and death from injury) being largely controlled by medical technological advancements, the infant mortality rate drops drastically to less than 1%. For that year, we find that life expectancy has risen by nearly 30 years compared to data from the year 1900.

Here we see that if a white American in 1900 reaches age 40, he/she can expect to live 28 years longer (to age 68). A white American who reaches age 40 in 1992 can expect to live 39 years longer (to age 79). This is a difference of 11 years. Furthermore, if the 1900 person lives to age 80, he/she can expect to reach age 85. If the 1992 person lives to age 80, he/she can expect to reach age 87. This is a difference of 2 years. Thus, it can be seen in the above figure that as the age of the individual increases, the gap between the life expectancy data of 1900 and 1992 diminishes. Returning to the first figure, which is based on newborn (age 0) life expectancies, we find a much larger gap in the data -- a difference of nearly 30 years.
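For anyone who wants to check the arithmetic, here is a minimal Python sketch. The numbers are only the ones quoted in the paragraph above; the dictionary layout and the function names are my own illustration and do not come from the original data source.

```python
# Remaining years of life expectancy for white Americans at a given age,
# using only the figures quoted in the text (not the full data set).
# Age 80: "expected to reach 85" means 5 more years; "age 87" means 7.
remaining_years = {
    40: {1900: 28, 1992: 39},
    80: {1900: 5, 1992: 7},
}


def expected_age_at_death(age, year):
    """Current age plus the remaining years quoted for that year."""
    return age + remaining_years[age][year]


def gap(age):
    """How many more years the 1992 person can expect than the 1900 person."""
    return remaining_years[age][1992] - remaining_years[age][1900]


for age in sorted(remaining_years):
    print(
        f"At age {age}: 1900 -> {expected_age_at_death(age, 1900)}, "
        f"1992 -> {expected_age_at_death(age, 1992)}, "
        f"gap = {gap(age)} years"
    )
```

The gap shrinks from 11 years at age 40 to 2 years at age 80, which is the point of the comparison: most of the nearly 30-year gain at birth comes from fewer early deaths rather than from much longer lives among those who reach old age.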